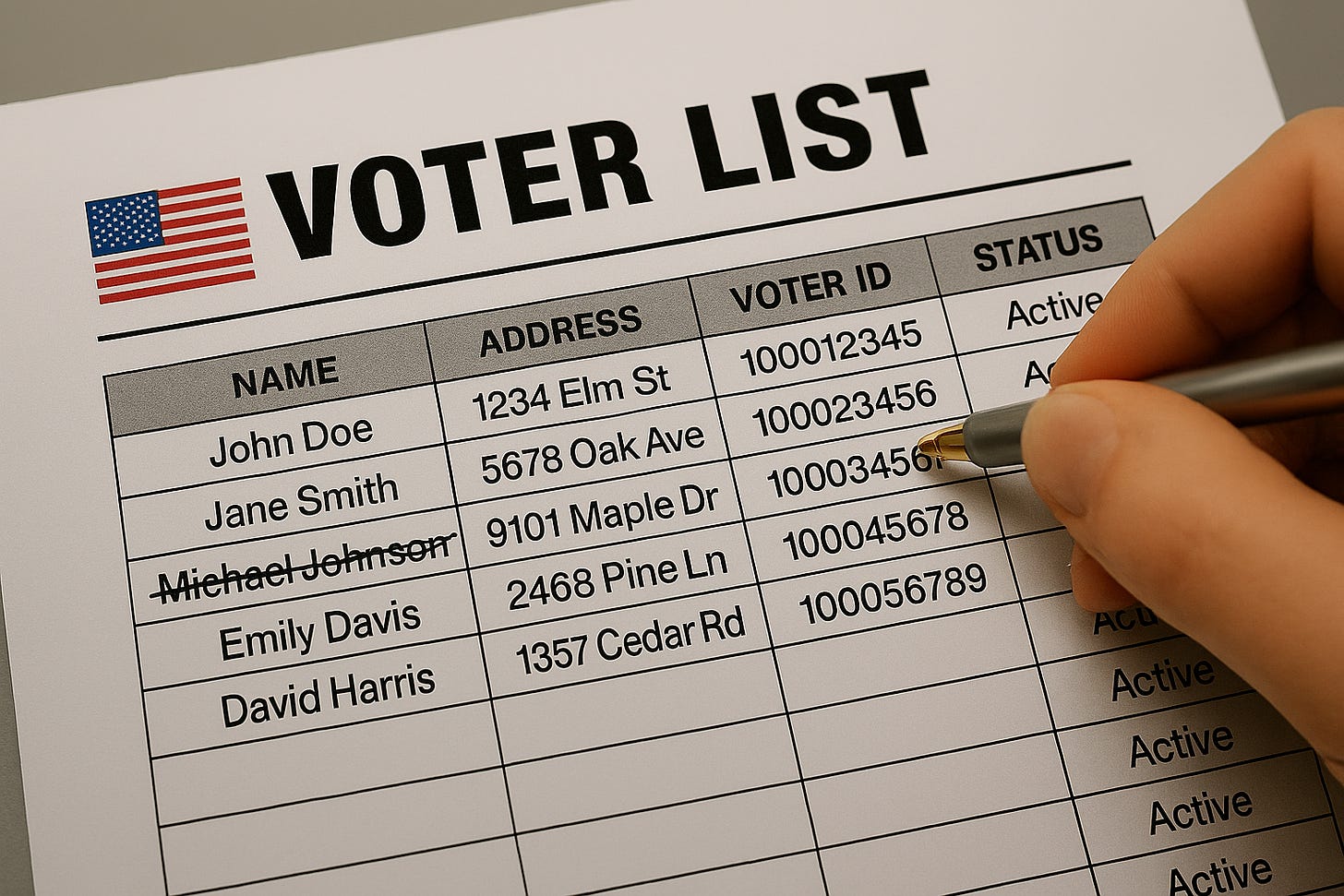

Evaluating voter list integrity

What information do you need to determine if you've got high quality voter registration data?

As we celebrate National Voter Registration Day, I thought that I’d take a bit of a dive into how we’ve been studying the integrity of voter list data, without using highly sensitive personally identifying information.

What information and methods can be used to evaluate the quality of voter registration data, especially if you as the analyst don’t want to be liable for securely handling identifiers like Social Security Numbers or state ID numbers?

The short answer, based on the science we have done, and stripped of the drama, is that you can do a thorough and sophisticated evaluation of voter registration data using only the public release data. You don’t need access to highly sensitive personally-identifying information to evaluate a voter list’s integrity.

So shifting to the drama, recently the U.S. Department of Justice (DOJ) has been requesting voter registration data from states and counties, apparently as part of an effort to examine the quality of voter registration data. Orange County, California was recently sued by the DOJ, which is seeking access to data on records removed from the county’s voter rolls prior to the 2020 election due to ineligibility. Orange County provided the requested records, but didn’t pass along highly sensitive information to the DOJ (like Social Security and state identification numbers, digital records of signatures, and other record numbers).

Seems that DOJ has taken issue with these data redactions, and according to a recent story in the LA Times, “The county’s lawyers offered to produce the redacted information as long as it was under a protective order or confidentiality agreement that would permit the federal government to use the data only for election enforcement purposes.”

So, I got to thinking about this issue a bit, because I’ve been collaborating with the Orange County Registrar of Voters for about seven years now. You read that right, since 2018, which is SEVEN YEARS, and which might be the longest-standing election official-academic collaboration of all time! Also, this is probably the only election official-academic collaboration that has existed in an election jurisdiction under two different Registrars of Voters (originally Neal Kelley, now Bob Page).

One of the primary aspects of our collaborative research project is the quantitative analysis of the accuracy and integrity of voter registration data in Orange County. So I’ve come to know quite a bit about Orange County voter registration data and we’ve also done quite a bit of research developing quantitative tools to establish the integrity of voter registration data — especially Orange County’s voter registration data.

The basic dataset that we work with is a “snapshot” of the jurisdiction’s voter file: the dataset as it existed at a particular moment in time. For many of our applications we use daily snapshots (as in our Orange County studies); for others we use weekly or monthly data. The choice usually depends on the size of the voter file. Bigger jurisdictions (like the state of California) are cumbersome to process computationally on a daily basis, so in those situations we use weekly snapshots.

We then accumulate snapshots over time, well before any upcoming jurisdiction-wide election. We do that because we want to get some baseline analyses done during periods before significant voter file maintenance occurs and before significant voter mobilization efforts occur. Ideally in California, we’d start in the fall of odd-numbered years, to get that baseline established before the June primaries and then collect data and run our analyses through the end of the calendar year, covering the fall general election and the post-election canvass period.

Once we have accumulated a reasonable number of snapshots, we get to work.

For those who care about the messy details, we use a long-established methodology which in general is called “record linkage”. It’s also called “matching” in some academic disciplines and applications, and in industry, they often call this methodology “entity resolution.” More specifically, we typically use probabilistic record linkage, deploying general algorithms that have been used for decades (like the workhorse Fellegi and Sunter [1969] algorithm).
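To make the idea concrete, here is a minimal sketch of the scoring step in the Fellegi and Sunter framework. Each field contributes a log-likelihood-ratio weight depending on whether it agrees across two records, and the summed weight is compared against two thresholds. The field names, the m- and u-probabilities, and the thresholds below are all hypothetical values chosen for illustration; in real applications they are estimated from the data. This is not the code from our papers.

```python
import math

# Hypothetical parameters, for illustration only.
# m = P(field agrees | the two records are the same person)
# u = P(field agrees | the two records are different people)
FIELD_PARAMS = {
    "last_name": (0.95, 0.01),
    "first_name": (0.90, 0.02),
    "birth_date": (0.98, 0.001),
    "address": (0.80, 0.005),
}


def match_weight(agreements):
    """Sum the per-field agreement/disagreement weights (in bits)."""
    total = 0.0
    for field, (m, u) in FIELD_PARAMS.items():
        if agreements[field]:
            total += math.log2(m / u)              # agreement is evidence for a match
        else:
            total += math.log2((1 - m) / (1 - u))  # disagreement is evidence against
    return total


def classify(weight, lower=0.0, upper=10.0):
    """Three-way decision; the thresholds here are assumed, not estimated."""
    if weight >= upper:
        return "link"
    if weight <= lower:
        return "non-link"
    return "possible link"


all_agree = {field: True for field in FIELD_PARAMS}
all_disagree = {field: False for field in FIELD_PARAMS}
print(classify(match_weight(all_agree)))
print(classify(match_weight(all_disagree)))
```

The middle category, “possible link”, is the one that matters most in practice: those are the record pairs that get sent on for human review.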

The first analysis looks at each snapshot in isolation. We use probabilistic record linkage to scan each individual snapshot for potential duplicate records based on information in the file, usually name, registration address, and date of birth. Those potential duplicate records get flagged and shared in a report that we send to the election official whose data we are working with.
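As a rough illustration of the within-snapshot scan, the sketch below groups records by date of birth and then compares names and addresses within each group using a simple string-similarity ratio from Python’s standard library. This is a deliberately simplified stand-in for probabilistic record linkage. The field names (`record_id`, `name`, `address`, `birth_date`), the example records, and the 0.8 threshold are assumptions made up for the example, and exact grouping on date of birth would miss duplicates where the birth date itself was mistyped.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a, b):
    """String similarity in [0, 1], ignoring case and surrounding whitespace."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def flag_potential_duplicates(records, threshold=0.8):
    """Return (id_a, id_b, score) for record pairs that look like duplicates."""
    # Group by date of birth so we only compare records within the same group.
    blocks = defaultdict(list)
    for rec in records:
        blocks[rec["birth_date"]].append(rec)

    flagged = []
    for block in blocks.values():
        for a, b in combinations(block, 2):
            score = (similarity(a["name"], b["name"])
                     + similarity(a["address"], b["address"])) / 2
            if score >= threshold:
                flagged.append((a["record_id"], b["record_id"], round(score, 3)))
    return flagged


# Made-up records for illustration.
snapshot = [
    {"record_id": 1, "name": "Maria Gonzalez", "address": "12 Oak St", "birth_date": "1980-04-02"},
    {"record_id": 2, "name": "Maria Gonzales", "address": "12 Oak Street", "birth_date": "1980-04-02"},
    {"record_id": 3, "name": "John Smith", "address": "99 Elm Ave", "birth_date": "1980-04-02"},
    {"record_id": 4, "name": "Maria Gonzalez", "address": "12 Oak St", "birth_date": "1975-01-15"},
]

for id_a, id_b, score in flag_potential_duplicates(snapshot):
    print(id_a, id_b, score)
```

Here records 1 and 2 are flagged as a potential duplicate pair. Record 4 is never compared with them because its date of birth differs.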

The next analysis gets a bit more complicated. We scan pairs of snapshots to look for records that otherwise match, but which have some information in at least one of the fields that has changed. For example, this analysis flags records where there has been a name change, an address change, or where the record’s party registration has changed. We then produce a report that provides many metrics about the record changes, for example:

We can provide a time-series graph showing the rate of record changes in different database fields across the span of snapshots we analyze.

We can show geographies that have higher or lower rates of record change in specific fields, for example, precincts with high rates of party registration change.

We can also send the election official a list of records that have frequent changes, or changes that we deem anomalous.
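The snapshot-to-snapshot comparison behind these metrics can be sketched as follows. To keep the example short, it assumes each record carries a stable, non-sensitive `record_id` that appears in both snapshots; when no such key is available, the pairing step would be done with record linkage instead. The field names and the example records are hypothetical.

```python
from collections import Counter

TRACKED_FIELDS = ("name", "address", "party")


def field_changes(earlier, later):
    """Return (record_id, field, old, new) for each tracked field that changed."""
    changes = []
    for record_id, old in earlier.items():
        new = later.get(record_id)
        if new is None:
            continue  # record is absent from the later snapshot; not a field change
        for field in TRACKED_FIELDS:
            if old[field] != new[field]:
                changes.append((record_id, field, old[field], new[field]))
    return changes


def change_rates(earlier, later):
    """Share of records present in both snapshots whose field value changed."""
    common = [rid for rid in earlier if rid in later]
    counts = Counter(field for _, field, _, _ in field_changes(earlier, later))
    return {f: (counts[f] / len(common) if common else 0.0) for f in TRACKED_FIELDS}


# Made-up snapshots keyed by the assumed record_id.
monday = {
    1: {"name": "A. Lee", "address": "1 Main St", "party": "DEM"},
    2: {"name": "B. Cruz", "address": "2 Main St", "party": "REP"},
    3: {"name": "C. Park", "address": "3 Main St", "party": "NPP"},
    4: {"name": "D. Omar", "address": "4 Main St", "party": "DEM"},
}
tuesday = {
    1: {"name": "A. Lee", "address": "1 Main St", "party": "NPP"},
    2: {"name": "B. Cruz", "address": "2 Main St", "party": "REP"},
    3: {"name": "C. Park", "address": "8 Pine Rd", "party": "NPP"},
    5: {"name": "E. Diaz", "address": "5 Main St", "party": "REP"},
}

print(field_changes(monday, tuesday))
print(change_rates(monday, tuesday))
```

Running the same comparison over every consecutive pair of snapshots gives the per-field series that a time-series graph would plot, and grouping the changes by precinct gives the geographic view.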

These analyses and reports are very useful to election officials. They provide data on the quality of their voter registration data, for example, on the rates of potential duplicate records and on anomalous record changes. These reports can be helpful in detecting and resolving issues that arise in complex administrative data, where multiple state or local agencies have access to the data. And importantly, these reports are helpful to keep an eye out for unauthorized access to mission-critical voter registration data, as some of the types of issues that these analyses identify might be the very record or file changes a hacker might introduce in the data.

It’s important to add that we have a variety of peer-reviewed publications discussing these methods and their application to voter registration data quality. A very early paper published the preliminary sketch of these ideas back in 2009, reporting on a pilot project in Washington and Oregon. A more detailed and sophisticated version of this approach was published in American Politics Quarterly in 2019 (preprint here). We published a subsequent paper that extended these methods to statewide voter list data, using a Bayesian method that helps better identify anomalous records (published in Statistics, Politics and Policy in 2022). Code to implement the methods discussed in the 2019 and 2022 papers is available (just follow the links provided in the papers). We have more recently developed and tested a new approach for probabilistic record linkage, using GPUs, that vastly improves the computational speed for these methods in very large state databases (FAST-ER).

We also published a book, Securing American Elections, that digs into analyzing voter list data quality, in addition to using other methods, to gauge election integrity.

What did we learn? Crucially, we found that thorough and sophisticated analyses of voter list quality can be done without using sensitive personally-identifying information. That is, we can dig deeply into the analysis of voter list integrity using just the information in the public-release file. We do not need record information like Social Security Numbers, state identification numbers, or any of those identifiers.

How can we do this? We accomplish this using two innovations. One innovation is the use of probabilistic record linkage on the public release data. Take the example of identifying duplicate records. If we had the PII (like Social Security Numbers or state identification numbers), we could easily and quickly identify duplicate records. With the public release data, using only name, street address, and date of birth, we can identify a superset of potential duplicates: the records that are in fact duplicates, plus records that are quite similar but are not actual duplicates. We can then pass those lists over to the election officials, who can use the PII to identify the actual duplicate records.
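The hand-off can be pictured as a two-step workflow: the analyst produces candidate pairs from public fields, and the election official, who alone holds the restricted identifiers, narrows that superset down. The sketch below shows only the official’s side, under the assumption that the restricted identifiers are available as a lookup from record ID to identifier. The function name, the lookup structure, and the placeholder values are hypothetical and are not drawn from any actual election office system.

```python
def confirm_duplicates(candidate_pairs, restricted_id_by_record):
    """Split analyst-supplied candidate pairs into confirmed and unconfirmed.

    This step runs inside the election office; the analyst never sees
    restricted_id_by_record.
    """
    confirmed, unconfirmed = [], []
    for a, b in candidate_pairs:
        id_a = restricted_id_by_record.get(a)
        id_b = restricted_id_by_record.get(b)
        if id_a is not None and id_a == id_b:
            confirmed.append((a, b))    # same restricted identifier: true duplicate
        else:
            unconfirmed.append((a, b))  # similar records, but not shown to be the same person
    return confirmed, unconfirmed


# Candidate pairs from the analyst (public fields only), with placeholder identifiers.
candidates = [(1, 2), (7, 9)]
restricted = {1: "ID-A", 2: "ID-A", 7: "ID-B", 9: "ID-C"}

print(confirm_duplicates(candidates, restricted))
```

The point of the split is that the sensitive identifiers never leave the election office, while the analyst’s list still saves the office from comparing every pair of records in the file.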

And that points out the second innovation — by working directly and in collaboration with the election official providing the data we can help them improve the quality of their voter lists (without the PII).

So there are two important lessons here. The first is that it is possible to use existing quantitative methodologies, which are widely used in many applications, to identify records in voter lists that might need additional scrutiny. It’s even possible to do this really quickly with our GPU-enabled probabilistic record linkage tools. The second lesson is that collaboration with election officials is crucial for determining which records might be problematic, why they might be problematic, and what to do about those records.

But will this methodology detect deliberate and malicious attempts at voter registration fraud? As we have argued in our published work, this methodology is suited for detecting anomalous record changes and record duplications in a voter file, especially those that would result from a larger-scale attack or failure of technology. But as we have seen recently in Orange County, if someone attempts to commit voter registration fraud, illegally adding a single record, that would be difficult for any anomaly or fraud detection methodology to reveal. But this is why there are other safeguards in place, in particular the requirement for those newly registered, who did not provide identification when they originally registered, to show identification the first time they try to obtain and cast a ballot in a federal election. That safeguard apparently worked in this instance, and the person who allegedly committed election fraud in this case is being prosecuted.

I’ll have more to say about fraud detection in future essays.

The bottom line is that collaboration, not confrontation and drama, is what it takes to make sure that our elections data are secure, accurate, and have great integrity.